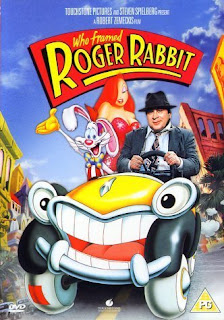

Skinning Roger Rabbit AKA Are we there yet?

I was lucky enough to grow up in the era when home computers and consoles were new, and a movie using CG was something worth talking about if anybody actually managed to use it (I'm looking at you, Max Headroom and Tron, both designed to look like CG but mostly made with old-fashioned effects, as computers weren't fast enough). One of my favourite movies didn't (AFAIK) use CG, but would use it a lot if it were made now: Who Framed Roger Rabbit. Roger Rabbit is a clever comedy/whodunit about a murder, with the main suspect being the eponymous Roger Rabbit. The twist is that Roger Rabbit is a rabbit, a cartoon rabbit. The world is set up so that Hollywood has a section called Toon Town, where cartoons actually exist. Bugs Bunny and Mickey Mouse actually exist and act in TV shows and movies just like other actors. The star (apart from Roger) is Eddie Valiant, played by Bob Hoskins, a classic 20s/30s-style grizzled private eye who hates Toons after one killed his partner. The part ...